To Reply by @Victor Stafusa is excellent, I come here only to deepen some points.

Parlance

One language is a subset (finite or infinite) words generated by the operator Kleene star on a set of symbols.

Word

A word is the concatenation of 0 or more symbols.

A word with zero symbols is the empty word. It can be represented by Epsilon or lambda, or simply by "".

Lambda:

Epsilon:

A single symbol is considered a word of size 1. Two concatenated symbols is a word of size two.

It is possible to concatenate any desired word, even the empty word. I will represent the concatenation with the point operator .. Some examples of concatenation:

"a" . "b" -> "ab"

"ban" . "ana" -> "banana"

"" . "" -> ""

"" . "a" -> "a"

"a" . "" -> "a"

Formally, every element formed by the Kleene star on a set of symbols T is a word made up of T.

Kleene star

A Kleene star generates concatenations of words over a previously existing set of words.

But, Jefferson, you spoke just before that a language is a subset about the set generated by the Kleene Star on symbols, why are you saying that the Star can operate on words?

Remember it was not long ago that I wrote about words of size 1? Well, a single symbol is a word of size one, so these definitions don’t contradict.

Kleene’s star operator is about a set V is represented by V*

A word is generated by the star of Kleene about V if:

- she is the empty word;

- she is a word of

V concatenated from a word generated by V*.

Yes, the definition is recursive. More formally, the second step is given by the following mathematical expression:

Language as a whole

Parlance L is a subset of T*, being T a set of symbols. As a set, I can define several ways. For example:

This is a word made up of symbols a, b and c, so that all the acome before all the b, which in turn appear before the cs. It also has the same amount of as, bs and cs.

For some cases, the language needs to follow a structure, called syntax. To define a syntax, use a grammar. Unfortunately, the set notation is poor to express the syntactic structure of a language, so we have another notation for this...

Derivative grammar

Derivative grammar, or transformational grammar, was proposed by Chomsky to study in a structured way how phrases form in natural language.

A grammar is defined as a set of symbol transformations. Usually, we have a transformation defined like this:

V -> W

Where V is a lexical form and W another lexical form.

What is a lexical form?

Let’s go back a little to Portuguese, our language. A reduced version of sentence formation would be:

- Generally speaking, we have a phrase

F

F can be described as SVO, where S is a guy, V is an adverbial phrase and O is an objectS is described by a nominal phrase Sn- as well as

O is also described as Sn

- A

Sn can be directly a name N, but it can also be a nominal phrase combined with an adnominal adjoint AN before or after another Sn

- Therefore, a

Sn can be represented by N, Sn . An or An . Sn

- A

An is an adjective Ad which can be combined by an adverb Av in the same way that a nominal phrase is combined with adnominal adjoints

- Therefore,

An can be represented by Ad, An . Av or Av . An

- Similarly, verbal phrases are verbs

Ve or combinations of verbal and adverb phrases: V is represented by Ve, V . Av or Av . V

N is the union of morphological classes of nouns Su and pronouns PSu, P, Ve, Ad and Av are the grammar classes we study in school

I can turn these rules into a grammar as follows:

F -> SVO

S -> Sn

O -> Sn

Sn -> N | An Sn | Sn An

N -> Su | P

An -> Ad | Av An | An Av

V -> Ve | Av V | V Av

A lexical form is any concatenation of symbols, and may contain intermediate and final symbols in any proportions. Examples of a lexical form:

F

"verde"

Sn

"incolores" . "ideias" . "verdes"

Sn . V . Sn

"incolores" . Sn . Ad

"gato" . "amarelas"

A word is a special lexical form that is composed only of final symbols. Therefore, from the above examples, only the following are words:

"verde"

"incolores" . "ideias" . "verdes"

"gato" . "amarelas"

Note that the grammatical structure does not need to make the generated word make sense in the language used, because there is no semantic evaluation, only syntactic. An example of nonsense is having something ("ideas") that has a color ("green") and has no color at all ("colorless"").

The language generated by a grammar is the set of all possible words that it is possible to generate with the grammar starting from an initial symbol; this language is indicated as L(G).

Classification of grammars

As Victor Safusa has already said, there are several types of grammars. They are differentiated in how each production is made.

A context-sensitive grammar has the following form:

A V B -> A W B

A B -> B A

V -> Z

Note that V turned into W given the context A prefix and B suffixed. On the other hand, note that V turn Z has no context involved. Production V -> Z is therefore said to be context-free.

A context-free grammar is a grammar whose productions are all context-free. To be context-free, the left side (producer?) may only have a single non-terminal symbol, with the right side being free (produced?).

As a curiosity, a regular grammar follows the following form, to V and W non-terminal symbols and a any terminal symbol:

V -> a W

V -> a

V ->

In chapter 7 of my Monograph of Graduation, I used Context-Sensitive Grammars to demonstrate some simulation properties of petri net.

Languages free of context

A language is context-free if it can be described by a context-free grammar. Simple as that, it is not?

Well, then, for it not to be free of context, it is necessary to demonstrate that it is not possible to build language, even with all the infinite possible productions.

For a grammar to be infinite, one thing must be true: a nonterminal symbol must produce itself. Grossly, it would be something like this (always generating the word from the symbol in parentheses):

S -> A(B)C -> AXT(Z)LC -> ... -> AXTPQO S AHFUQLC

Of the symbol S, reached S again, preceded by AXTPQO and succeeded by AHFUQLC. That means I can also get lexical expression AXTPQO AXTPQO S AHFUQLC AHFUQLC, and also the AXTPQO AXTPQO AXTPQO S AHFUQLC AHFUQLC AHFUQLC, and generally (AXTPQO)^n S (AHFUQLC)^n. This operation (exit from Sand get into S again) is called pumping.

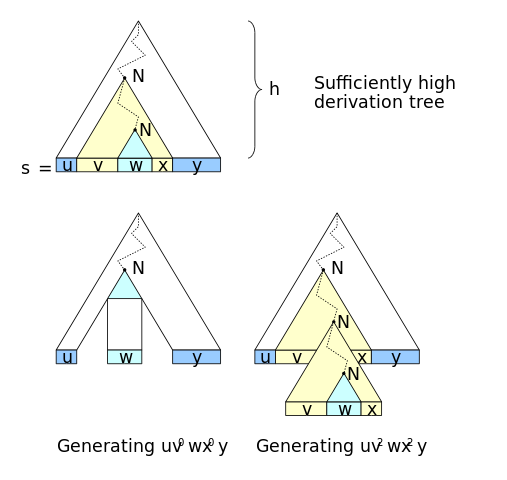

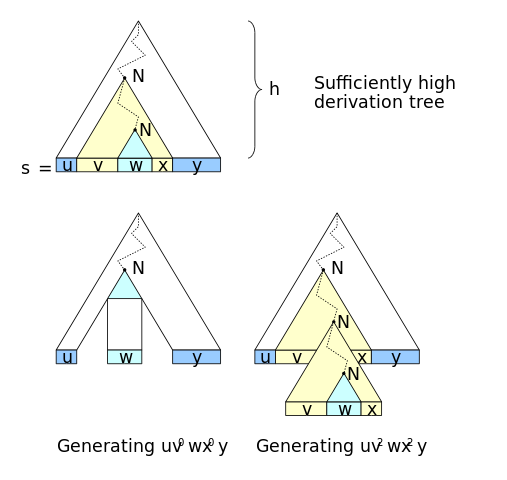

Visually, there’s this image of motto of pumping in Wikipedia showing the generalization of the previous paragraph (N pump N):

Every context-free language follows this pumping motto when infinite.

EDIT An alternative name given to this pumping production is self-nesting; N is nestled within the productions of N

Direct answers

What is a context-free language?

A language generated by a context-free grammar.

How is it possible, if it is possible, to define whether a language is context-free or not?

In general, it is enough to prove by writing a context-free grammar, but this task is not always trivial.

There are cases where it is impossible to know whether a context-sensitive language is context-free. Even proving that a language is not context-free is difficult, because even languages that respect the pumping lemma may not be context-free.

Talks about programming languages?

– gato

@cat programming languages and compilers.

– viana